One thing I see a lot of these days is material like this blog or this book telling you to do your own thing, do your own projects, branch out on your own, take initiative, etc. Whenever I read these things, I can't help but feel inspired: I enjoy doing my own projects, and I would love to be able to work on them all day and maybe someday live off them.

So here is my first try: a small project (too small for me to call it a startup) called Your 30 Before 30, which might be a little fun to do. The idea is to create a "30 before 30" list (similar to a bucket list), where you write down 30 things you want to do before you're 30. They don't have to be anything special, but they can be. Then, when you accomplish one of them, you mark it off and tell people what you did.

I'm putting it here to invite any of my readers to check it out and "beta" test it. Let me know what you think. What's cool, what's not? Do you have any suggestions/constructive criticisms? Any feedback is welcome.

Dec 23, 2010

Dec 7, 2010

Second Thoughts on IronRuby

Unfortunately, after using IronRuby at work a bit, I've found that it still has a few too many bugs for me to depend on it for production software. The bugs have already been reported; however, they are tagged as low priority and are unlikely to be fixed any time soon. Rather than spending lots of time fixing the bugs myself (I get paid to make a profit, not to work on open-source pet projects), I'll just keep doing things the way I have been!

Hopefully someone else will find IronRuby useful!

In case you're wondering, the bug that really killed it for me was the inability to use gems with RVM under Linux. The following command fails while installing RubyGems:

rvm install ironruby

Without RubyGems, Ruby is drastically less useful, enough that I don't really want to use IronRuby anymore.

Dec 3, 2010

Getting Your Files

I suppose I'm talking to the wrong group of people here, but I decided to write a quick post about how to access anybody's files without needing their username/password for their computer.

It's quite simple:

1) Put in an Ubuntu LiveCD/LiveUSB

2) Boot off it

3) Access their files by going to Places -> X GB/TB Volume

This doesn't work if they have any sort of encryption on their drives or a BIOS password, but otherwise you can do whatever you feel like! So if you have a laptop or a machine that random people can easily get to, and there are files on it you don't want others seeing, you should probably secure them against something like this.

Dec 2, 2010

Embedding a Ruby REPL in a .NET App

I've finally gotten fed up with using VB.NET as a scripting language at work, so I decided to try dropping an IronRuby interpreter into the system. It's fairly simple to do; here's how to build a REPL within your app.

Step 1: Make a window for the REPL. Give it a box for your input and a box for your output. How you do this is up to you; the interesting part is how to use Ruby within it.

Step 2: Initialize the scripting engine. You need a config file; I call it ironruby.config:

<?xml version="1.0" encoding="utf-8" ?>
<configuration>
  <configSections>
    <section name="microsoft.scripting" type="Microsoft.Scripting.Hosting.Configuration.Section, Microsoft.Scripting, Version=1.0.0.5000, Culture=neutral, PublicKeyToken=31bf3856ad364e35" requirePermission="false" />
  </configSections>
  <microsoft.scripting>
    <languages>
      <language names="IronRuby;Ruby;rb" extensions=".rb" displayName="IronRuby 1.0" type="IronRuby.Runtime.RubyContext, IronRuby, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
    </languages>
  </microsoft.scripting>
</configuration>

You need to make sure the DLLs that come with IronRuby are available; just put them in the same folder as your app.

Now for the code that loads in your Ruby stuff. Initialize a few objects:

using Microsoft.Scripting.Hosting;

var runtime = new ScriptRuntime(ScriptRuntimeSetup.ReadConfiguration("ironruby.config"));
var engine = runtime.GetEngine("Ruby");

// create the scope once and reuse it; this is what preserves variables declared across executions
var globalScope = engine.CreateScope();

// I'm assuming you have a textbox named command; adjust this if your input comes from somewhere else
var result = engine.CreateScriptSourceFromString("(" + command.Text + ").inspect").Execute(globalScope);
string output = result.ToString();

And, jackpot! result holds the inspected form of whatever was typed into command.Text (as an IronRuby string object), and output is that same text as a regular .NET string, ready for your output box.

This code could be a little more robust: an exception is thrown if, say, the syntax is invalid or the code fails at runtime, so make sure to catch exceptions and handle them nicely.

The nice thing about this is that you can tinker with the classes within your own application. If you enter this in the REPL:

# replace MyApp.exe with the .exe or .dll file that your app uses
require "MyApp.exe"

you can then access the namespaces and classes within your own app, and fiddle with them while the app is running!
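The same eval-then-inspect idea can be sketched in plain Ruby, without IronRuby or the DLR hosting API, which may be handy for prototyping the REPL's behaviour. This is only an illustration under that assumption: a Binding object stands in for the ScriptScope (locals defined in one call stay visible in the next), and `repl_eval` and `new_scope` are hypothetical helper names.

```ruby
# Evaluate one line of input against a persistent binding and return the
# inspected result, mirroring the "(" + input + ").inspect" trick.
# Errors become messages instead of crashing the REPL loop.
def repl_eval(scope, input)
  scope.eval("(#{input}).inspect")
rescue SyntaxError, StandardError => e
  "#{e.class}: #{e.message}"
end

# A fresh binding plays the role of the ScriptScope: locals assigned in
# one eval on this binding are still visible in later evals on it.
def new_scope
  binding
end

scope = new_scope
puts repl_eval(scope, "x = 20")          # prints 20
puts repl_eval(scope, "x + 22")          # prints 42
puts repl_eval(scope, "undefined_name")  # prints a NameError message
```

Turning errors into strings rather than letting them propagate is one way to handle the exceptions mentioned above; in the C# version the equivalent would be a try/catch around the Execute call.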

Nov 22, 2010

Nov 17, 2010

Software Metrics and Instrumental Variables

I recently read this article about how software metrics are mostly useless and tend to cause more problems than they solve. This reminded me of a topic in stats which apparently has some application in software development.

The use of software metrics is an example of a statistical technique called instrumental variables. Often when you want to understand some phenomenon or relationship, you run into problems because many factors are unobservable. This means that they are not concrete things that you can stamp a number on to get a clear measurement of the factor. One example that constantly crops up in economics and software development is ability. A person's ability can have extremely strong effects on other factors such as productivity, wage, etc. However, you can't really come up with a solid measurement for a person's ability: suppose some programmer you know has an ability of 10. What does that mean?

Compare that to a metric like lines of code per hour. A measurement of 10 has a very clear and concrete meaning: given the changes that they made in the hour, the code they have produced contains 10 newlines.

This is where instrumental variables come in. An instrument is an observable variable, used in place of the unobservable one, that satisfies two conditions: it has to be correlated with the variable it is standing in for, and it can't be correlated with random errors or other omitted factors. The power of the instrument depends on the strength of the correlation between the instrument and the unobserved variable. This is why people use things like lines of code per hour, years of experience, and so on when trying to measure the productivity of a programmer.

Unfortunately, there are a number of shortcomings with the instrumental variables method. The biggest issue is finding a good instrument. We know the criteria a good instrumental variable must meet (there are all sorts of math proofs you can look up if you like); however, that doesn't mean that any instruments actually satisfy them. On top of that, when people know which metric you're using, they may try to cater to it, which introduces a correlation between the instrument and other omitted variables, such as their skill at catering to metrics.

In short, the problem is not so much the method as finding good instruments. Unfortunately, if you can't find a good instrument, you'll have to resort to a different method. According to a stats professor of mine, one way of dealing with unobservable factors is to use what's called a mixture model. It supposedly works, but the procedure appears to be much more complicated and can be less precise than having a good instrument. I'm still working on figuring out how to do this sort of thing; perhaps I'll talk a bit more about it another day.
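For the curious, here is the textbook single-instrument IV estimator, β_IV = Cov(z, y) / Cov(z, x), applied to made-up numbers. In that standard setup the instrument z is correlated with an observed regressor x that is contaminated by an unobserved factor u (think ability), while z itself is uncorrelated with u. The data and the `cov` helper below are illustrative assumptions, not real measurements:

```ruby
# Population-style covariance (divides by n; the divisor cancels in the
# ratios below, so it doesn't matter which convention is used).
def cov(a, b)
  ma = a.sum.to_f / a.size
  mb = b.sum.to_f / b.size
  a.zip(b).sum { |ai, bi| (ai - ma) * (bi - mb) } / a.size
end

# Made-up data: u is the unobserved factor, z is the instrument.
# z and u are constructed to be exactly uncorrelated in this sample.
z = [1, -1, 1, -1]
u = [1, 1, -1, -1]
x = z.zip(u).map { |zi, ui| zi + ui }      # observed regressor, contaminated by u
y = x.zip(u).map { |xi, ui| 2 * xi + ui }  # the true effect of x on y is 2

ols = cov(x, y) / cov(x, x)  # ignores u, so it is biased
iv  = cov(z, y) / cov(z, x)  # uses only the variation in x that comes from z

puts "OLS: #{ols}"  # prints OLS: 2.5
puts "IV:  #{iv}"   # prints IV:  2.0
```

Plain regression (OLS) credits x with part of u's effect and reports 2.5, while the IV ratio recovers the true value of 2. If z were correlated with u (for example, because people game the metric), the IV estimate would be biased as well.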

Nov 16, 2010

Parse vs. TryParse

I was having a problem not too long ago where a specific bit of code was abysmally slow. I scanned through it to understand why, in theory, it might be slow, and then ran it through a profiler to see where it was spending most of its time. The results were very strange: most of the time was spent parsing strings into integers and decimals. (For those who have never done .NET: besides float and double, it has a decimal type, which is better suited to financial data because with decimals, 0.1 + 0.7 really does equal 0.8.) This was very odd, so I figured I'd look at it in more detail.
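The decimal claim is easy to check. Ruby's core has no decimal type, so this sketch assumes Rational (exact fractions) as a stand-in for exact arithmetic; BigDecimal would be the closer analog of .NET's decimal:

```ruby
# Binary floating point cannot represent 0.1, 0.7 or 0.8 exactly.
puts 0.1 + 0.7             # prints 0.7999999999999999
puts 0.1 + 0.7 == 0.8      # prints false

# Exact arithmetic: the r suffix makes a Rational literal (Ruby 2.1+).
puts 0.1r + 0.7r == 0.8r   # prints true
```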

It turns out that in .NET, the regular Parse() functions throw an exception when the string is not well-formed. If the string is "N/A", for example, an exception is thrown. That value was common in the data I was receiving, so I was just catching the exception and setting the value to null right away. The problem was that this code was executed hundreds of times a second, so the overhead of throwing exceptions added up!

In high-performance situations, you're better off using a function like TryParse(), which takes the int/decimal variable as an out parameter and returns true if the parse succeeded. It doesn't throw an exception and is therefore much faster; I saw a massive performance increase!

While my example here is with .NET, it applies to pretty much any language where a string to int/float method throws an exception when the string is malformed. If your language has something like TryParse, it is definitely recommended over Parse for high-performance situations!

Or an even better moral: don't throw exceptions in code that needs to be fast!
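Ruby has the same trade-off: Kernel#Integer raises ArgumentError on a string like "N/A", and since Ruby 2.6 it also accepts `exception: false`, which returns nil instead, much like TryParse returning false. A rough sketch; the helper names and the timing harness are illustrative, and absolute timings will vary by machine and Ruby version:

```ruby
# Parse-style: Integer() raises ArgumentError on malformed input,
# so every bad value pays for raising and rescuing an exception.
def parse_with_rescue(s)
  Integer(s)
rescue ArgumentError
  nil
end

# TryParse-style: exception: false (Ruby 2.6+) returns nil instead of raising.
def parse_without_raise(s)
  Integer(s, exception: false)
end

# Measure elapsed wall-clock time with a monotonic clock.
def time_it
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  yield
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
end

# Half of the inputs are malformed, like the "N/A" values in the post.
inputs = Array.new(100_000) { |i| i.even? ? "N/A" : i.to_s }

t_rescue = time_it { inputs.each { |s| parse_with_rescue(s) } }
t_plain  = time_it { inputs.each { |s| parse_without_raise(s) } }
puts format("rescue: %.3fs  exception: false: %.3fs", t_rescue, t_plain)
```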

Nov 11, 2010

Why I Prefer Linux Servers

Say what you will about the quality of Linux servers vs. Windows servers, but here is one huge reason why Linux servers are better (all prices in USD):

Windows Server 2008 Licence with 5 users (not accessible through Remote Desktop): $1,029

To enable 5 users with Remote Desktop: $749

To add 5 more users: $199

To allow those 5 more to use Remote Desktop: $749

Ouch.
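Adding up the quoted prices gives the total for a ten-user setup where everyone can use Remote Desktop; a quick sketch using only the figures listed above:

```ruby
# Windows Server 2008 prices quoted above (USD).
costs = {
  "Server licence, 5 users"    => 1029,
  "Remote Desktop for 5 users" => 749,
  "5 more users"               => 199,
  "Remote Desktop for those 5" => 749,
}

total = costs.values.sum
puts "10 Remote Desktop users: $#{total}"     # prints 10 Remote Desktop users: $2726
puts "Per user: $#{(total / 10.0).round(2)}"  # prints Per user: $272.6
```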

Nov 7, 2010

Writing Blog Posts

Supposedly this month is NaBloPoMo, which means "National Blog Posting Month", where you are supposed to post one article a day for a month. Besides already having failed at it, I'm not sure how I feel about this idea. You shouldn't write a post just for the sake of writing a post, you should have a reason to write it. So I've decided to write about some of the reasons why I post:

1) There's something that interests me. Typically this is something science-y or math-y or computer-y or whatever. This is the #1 reason to post, since you can actually write good articles this way - when you're interested in something, you tend to put a fair bit more effort into the post than you would otherwise. On top of that if something interests you then chances are it will interest other folks, so it's a good way to build up a readership which in turn can give you more ideas through comment feedback, link sharing, etc.

2) I've found some information that I want to share with people. This is usually little updates like FireSheep or little howtos like installing Rubygems on Ubuntu. For the latter it is usually, "I've figured out how to do X with technology Y (e.g. Ubuntu, Ruby); it was a pain in the ass, so this is how to do it the easy way." Interestingly enough, these kinds of posts generate the majority of this blog's traffic. This type of post is good if you want a steady stream of traffic and if you feel all warm inside when people leave comments saying "thank you so much!"

3) Jokes I've thought of. These ones are rare since I'm not much of a funny guy, but maybe you can come up with this type of thing more often!

Anyway that pretty much makes up most of my reasons for posting. The final reason is rants, but I think the Internet is full enough of that kind of post, so I won't encourage it here - however I will probably still end up occasionally ranting now and then...

So for those of you wanting to do NaBloPoMo, I hope this helps!

Oct 25, 2010

FireSheep

I just discovered a Firefox plugin called FireSheep, which sniffs the local wireless network for session cookies from common sites and lets you sign in as anybody it finds. This is a trivial hack: anybody who understands wireless networks and session cookies could figure it out, and it's fairly easy to explain to anybody who doesn't.

I dug a bit into its code; it gives easy access to these sites:

- Amazon (which saves your credit card info, so they could potentially buy stuff on your card)

- DropBox (lots of people use this one to backup files, this would give you access to all their stuff)

- Facebook

- Github

- Google (fortunately Gmail has an option to always use https)

- Live (aka Hotmail)

- Twitter

- and many more!

So long story short, remember that if you're using your laptop on a public network you should be using https:// and not http://.

This reminds me of a neat program I found called STunnel. It creates an SSL tunnel to any server that supports SSL connections. So if you have a program that can't use SSL for whatever reason, you can connect it to the STunnel service on your local machine and have STunnel forward the traffic, encrypted, to wherever you are trying to connect.

Oct 22, 2010

5 Weekends

Seems like a lot of people are excited that this month has 5 Fridays, Saturdays and Sundays. They say that it hasn't happened in <some large number> of years (every time I see it, the number is different).

Except, you know, January of this year. Or May last year. So, fail.
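Those two counterexamples are easy to verify. Here is a sketch that lists every month in a range of years with 5 FSSs, using the rule that such a month must have 31 days and start on a Friday (`five_weekend_months` is a hypothetical helper name):

```ruby
require 'date'

# A 31-day month is 4 full weeks plus 3 extra days (the 29th-31st), which
# fall on the same weekdays as the 1st-3rd. So it has five Fridays,
# Saturdays and Sundays exactly when the 1st is a Friday.
def five_weekend_months(years)
  years.flat_map do |year|
    months = (1..12).select do |month|
      Date.new(year, month, -1).day == 31 && Date.new(year, month, 1).friday?
    end
    months.map { |month| [year, month] }
  end
end

p five_weekend_months(2009..2010)  # prints [[2009, 5], [2010, 1], [2010, 10]]
```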

Maybe they all meant that there hasn't been any Octobers with 5 Fridays, Saturdays and Sundays (FSSs) in that many years. My answer: go go gadget Ruby! Here's a little script that will find the last October which had 5 FSSs:

require 'date'

i = 2009

# a month with 31 days will have 5 FSSs iff the first
# day of the month is a Friday
begin
  day = Date.parse("#{i}-10-01")
  i -= 1
end until day.wday == 5

puts day.year

Turns out the answer is... 2004! Sure enough, the calendar for October 2004 does indeed have 5 FSSs. Before that? 1999. Before that? 1993. I could go on, but I'm getting bored of this.

Oct 21, 2010

Building Webapps in No Time

One thing I've been thinking about is very small web apps, and how to get them out there quickly without a lot of work. These are some tips, with the arguments behind them; they may not work for you, but I'm assuming you're all smart here anyway and can decide for yourselves whether to follow them.

This guide is mainly targeted towards developers who are interested in building their own apps, but others can probably benefit from some of these too.

Tip 1: Screw IE6. Supporting IE6 can be a down-the-road feature (or v2.0 feature, however you want to call it) but for now it's going to consume much more time than it's worth. Too many web companies say, "oh, but the 10-15% of people still using IE6 are so important to me that I need to double my front-end development time just to make sure my site works in their browser."

No.

Tip 2: Choose a technology that is quick for development. Anything with a build cycle is out - one of the major issues I have working with C# these days is that I have to compile my code and restart the app, which takes long enough to break my concentration, and I have to spend time re-focusing before I can be productive again (it doesn't help that I'm a bit of a space cadet, and it really doesn't take long for me to find something shiny and distracting while the code is compiling). With PHP, Ruby, Python and the like, I can make my change and do a quick Alt-Tab + Ctrl-F5 to see it.

Note: this also applies to front-end stuff. CSS Frameworks like Blueprint and Javascript libraries like jQuery will help you out there.

Tip 3: Use other people's code. Package management systems (Rubygems, PyPI, PEAR, etc. - is there something like this for jQuery plugins?) have many, many libraries available for you to use, built by people who have faced the same problems as you and have made their solutions available so that you don't have to reinvent the wheel. In economics it's called capital accumulation, and you're much better off using it.

Tip 4: Use low-risk hosting. The difference between low-risk and low-cost hosting is that if your idea turns out to suck (like most of mine do), you don't actually lose anything, whereas a low-cost host will charge you, say, $4/month regardless of what happens. An example of such a host is NearlyFreeSpeech.NET, which doesn't charge you for hosting if nobody goes to your site. It is free to sign up and create sites, and you get charged solely for the bandwidth you use. There are a couple of gotchas; I've written about them before if you want more details.

In short, you can pay as little as $5 for the hosting in the initial stages, which is less than the cost of your celebration beer when you launch your site.

Tip 5: This one is going to be a bit touchy for some folks, but it will help you get the app launched sooner. Use Facebook Connect as your authentication system. Seriously. While not everybody is on Facebook and others have privacy concerns and don't really want to sign up on sites that use Facebook Connect, there are two good reasons from a business perspective to use it for your site:

1) You don't have to build your own authentication system (or, if you are following Tip 3, you don't have to integrate a library like Devise to handle it). You'll probably still need to keep a users table in your app, but you won't need to handle the annoying aspects of password retrieval, profile pics, friends management, etc.

Supporting Facebook Connect with PHP is simple:

<a href="<?= $facebook->getLoginUrl() ?>">Log in with Facebook</a>

2) Free advertising and market feedback. When people use your app, you can post stuff to their wall saying something like, "so-and-so did X on MyAwesomeApp.com!". Their friends will then see the post and (hopefully) say, "whoa sweet, let's go check that out." You can take advantage of Facebook's already-established network for marketing, or for learning what people have to say about your app.

If your app goes anywhere, then you can start thinking about supporting non-Facebook accounts. But for your initial launch you want to do the least possible work, and using something like Facebook Connect will help you achieve that goal.

Tip 6: Choose a design and stick with it. Reworking a design can be costly, especially if you're using a lot of Javascript. Do a single design and unless it is absolutely horrible, just stick with it and rework it further down the road once you have actually launched your site.

Of course, some of these tips don't always apply: if you're building an app targeted toward businesses then Facebook Connect isn't really an option. I'll repeat myself: it is important for you to judge how these points might influence the way you are doing things, and to act accordingly.

Oct 8, 2010

PHP Preprocessor

I've thought of a random cool project, but this time instead of starting out on it, getting halfway through and then abandoning it, I figure I'll put it up for public discussion where others can throw in their two cents, tell me if such a thing already exists, or tell me I'm a moron and that it's a stupid idea (actually there will be people who will always say this, regardless of what the idea is).

I want to add some sort of preprocessor to PHP to extend the power of the language. It seems a bit silly to add a preprocessor to something that is already a preprocessor, but I figure it is more practical to build something that outputs a language that is already very widely supported. My main incentive for this project is to use it for PHP sites and deploy them onto servers that already support PHP, without needing additional software.

My main inspiration for this project is Ruby on Rails. Rails has a lot of good ideas, and many of them translate easily to PHP and other languages. You can use PHP's __call(), __get() and __set() methods to mimic a lot of what Rails does; I already do this in MinMVC for model objects.

What I think is much more powerful is the ability to use code to manipulate classes (aka metaprogramming). In Ruby I can do stuff like this:

What this preprocessor would do is scan for files that end with .m.php and convert them to .php files. The project would involve writing a parser that would scan for preprocessor blocks and execute them. The code in the preprocessor blocks would also be PHP, so you can do whatever you like in there. The key difference would be the use of $this: if you're inside a class, $this would refer to a Class object that represents the current class. So you would write something like this:

Quick aside on the use of the extension: I use .m.php instead of .phpm or something because Apache has a tendency to just serve files if it doesn't recognize the extension, which is a security risk. If it ends with .m.php, Apache will just execute it as a PHP file and fire out a PHP error when it sees weird stuff.

I'm still thinking about the details as to how you might add methods to $this, so that people can write add-ons. It might look something like this:

I want to add some sort of preprocessor to PHP to extend the power of the language. It seems a bit silly to add a preprocessor to something that is already a preprocessor, but I figure it is more practical to build something that outputs a language that is already very widely supported: my main incentive for this project is to use it for PHP sites and deploy them onto servers that already support PHP, without needing additional software.

My main inspiration for this project is Ruby on Rails. Rails has a lot of good ideas, and many of them translate easily to PHP and other languages. You can use PHP's __call(), __get() and __set() methods to mimic a lot of the things that Rails does - I already do this in MinMVC for model objects.

What I think is much more powerful is the ability to use code to manipulate classes (aka metaprogramming). In Ruby I can do stuff like this:

class MyClass
  awesome_function_that_manipulates_MyClass
  ...
end

In PHP you can't do this. While PHP has a reflection API (I haven't actually used it much, so I can't comment on how good it is), it appears to be read-only: you can get information about classes and methods, but you can't modify them. You can't stick arbitrary methods into a class at run time (unless I haven't read the PHP Reflection documentation properly). So you can't do something like this in PHP:

class MyClass
  has_many :users
end

The has_many method would add certain methods to MyClass to make it nice and easy to interact with the users that this class has. While you can mimic this functionality using some __call() hackery, I think adding some sort of metaprogramming capability would make this much simpler and more maintainable.

What this preprocessor would do is scan for files that end with .m.php and convert them to .php files. The project would involve writing a parser that would scan for preprocessor blocks and execute them. The code in the preprocessor blocks would also be PHP, so you can do whatever you like in there. The key difference would be the use of $this: if you're inside a class, $this would refer to a Class object that represents the current class. So you would write something like this:

class MyClass {
    #begin
    $this->has_many("users");
    #end
}

It's not nearly as elegant as the Ruby version, but that's OK. The preprocessor would execute the code between #begin and #end and output PHP code that affects MyClass, such as adding methods called getUsers(), addUser(...), etc.

Quick aside on the file extension: I use .m.php instead of something like .phpm because Apache has a tendency to just serve a file as-is if it doesn't recognize the extension, which is a security risk. If the file ends with .m.php, Apache will execute it as a PHP file and throw a PHP error when it sees the unfamiliar syntax.
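To make the #begin/#end idea a bit more concrete, here is a toy sketch of the transformation. It is written in Python rather than PHP, and instead of executing arbitrary PHP inside each block as proposed, it only recognizes one hypothetical directive, $this->has_many("..."), using a regex. The generated getUsers()/addUser() bodies and the naive singularization are assumptions for illustration, not a design decision from the post.

```python
import re

# A #begin ... #end block, including any indentation before #begin.
BLOCK = re.compile(r"[ \t]*#begin\s*(.*?)\s*#end", re.DOTALL)
# The one (hypothetical) directive this toy understands: $this->has_many("users");
HAS_MANY = re.compile(r'\$this->has_many\(\s*"(\w+)"\s*\)\s*;')


def expand_has_many(plural):
    """Return PHP method stubs for a has_many("...") directive.

    Singularization is deliberately naive: it just drops a trailing "s".
    """
    singular = plural[:-1] if plural.endswith("s") else plural
    return (
        f"    public function get{plural.capitalize()}() {{ return $this->{plural}; }}\n"
        f"    public function add{singular.capitalize()}(${singular}) "
        f"{{ $this->{plural}[] = ${singular}; }}"
    )


def expand_block(match):
    """Replace one preprocessor block with the code its directives generate."""
    body = match.group(1)
    return "\n".join(expand_has_many(m.group(1)) for m in HAS_MANY.finditer(body))


def preprocess(source):
    """Turn the contents of a .m.php file into plain PHP."""
    return BLOCK.sub(expand_block, source)


source = '''class MyClass {
    #begin
    $this->has_many("users");
    #end
}'''
print(preprocess(source))
```

Running it prints MyClass with getUsers() and addUser($user) in place of the block. A real version would need an actual PHP parser and a way to run PHP at build time, which is where most of the work would be.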

I'm still thinking about the details as to how you might add methods to $this, so that people can write add-ons. It might look something like this:

class_function has_many($what) {
    ...
}

Who knows. Any comments on this idea?

Oct 5, 2010

Sep 29, 2010

On C# and .NET

At my current job I've been using C# and VB.NET for development, two technologies that I had never used and had always shied away from because they are "corporate" technologies. I always figured that C# was just like Java, and since I hated Java in university, I expected to have the same reaction when I tried C# for the first time.

Turns out I was wrong. In a nutshell, I would describe C# as "Java done right". The extra features that come with C# are little things that make the language as a whole more pleasant to use, and C# doesn't make me want to cry by taking forever to do something simple, which is how I usually felt when working with Java.

Here are a few of the things I like:

Type Inference - some people will probably hate my code. I use var everywhere. My code looks like JavaScript! It's especially useful in foreach loops over dictionaries (aka hashes; I'm so used to using Ruby that I tend to overuse this class):

foreach (var pair in myHash) {
    ...
}

This way, if my hash has some complicated type, I don't need to write out the full KeyValuePair type.

Functional Abstraction - this one is also known as anonymous functions. Check this one out:

CSV.Open("mycsv.csv", "r", row => {
    // ... do something to row
});

This is valid C# code! And it works great! It isn't exactly the same as blocks in Ruby (break/next/redo/return don't work the same way), but it accomplishes a lot of what I use blocks for.

Events - this is the observer pattern built into the language. I won't go too much into this, as you can just learn about it from the Wikipedia page. This is actually something that would be useful in Ruby (probably not that hard to implement as a gem), and it is implemented in Rails.
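To show what the observer pattern looks like when you build it yourself (roughly what such a Ruby gem would have to do), here is a minimal sketch in Python. The Event and Downloader classes are hypothetical, and this is not how C# implements events internally; overloading += and -= just mirrors the C# subscription syntax.

```python
class Event:
    """A minimal observer-pattern event: handlers subscribe with +=,
    unsubscribe with -=, and fire() calls every subscribed handler in order."""

    def __init__(self):
        self._handlers = []

    def __iadd__(self, handler):
        self._handlers.append(handler)
        return self

    def __isub__(self, handler):
        self._handlers.remove(handler)
        return self

    def fire(self, *args, **kwargs):
        # Iterate over a copy so handlers may unsubscribe while the event fires.
        for handler in list(self._handlers):
            handler(*args, **kwargs)


class Downloader:
    """Hypothetical publisher that raises an event when it finishes."""

    def __init__(self):
        self.completed = Event()

    def run(self, url):
        # ... the actual download would happen here ...
        self.completed.fire(url)


log = []
downloader = Downloader()
downloader.completed += lambda url: log.append("done: " + url)
downloader.run("http://example.com/file.csv")
print(log)
```

The publisher never needs to know who is listening; subscribers attach and detach themselves, which is the whole point of the pattern.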

There are a number of things that I don't really like - the system is closed and very much owned by Microsoft. While they have their Community Promise, which says they won't sue the Mono developers for reimplementing their platform, you never know when they might try to flex their muscle.

A quick note on Mono: it's great. The executables it produces are binary compatible with Windows, so you can pull the Java-style compile-once-run-everywhere thing: build an executable with Mono in Ubuntu, and it will run under Windows, provided you're not using any platform-specific libraries. Compile a .dll on Windows with Visual Studio and you can use it just fine in Mono under Ubuntu. I'm impressed.

Sep 24, 2010

Whiny Programmers

This post is in response to this rant here. If you don't feel like reading it, that's OK; it is about a programmer who is annoyed about the following scenario:

1) Business Guy (BG) has an idea (I say Guy here because I've only ever been approached by guys with business pitches, but you can easily replace the G with Girl if that's the case).

2) BG finds Programmer (P), offers no pay but 50% equity.

3) P does all the coding, late nights, etc.

4) BG makes all the decisions, takes all the glory, no mention of P in press releases, etc.

5) P gets annoyed.

This is a common scenario, and a lot of programmers have seen it (I was lucky: when I went along with this scenario I was actually getting paid, although my share of the equity was lower).

My problem with this type of rant is this: what is stopping P and BG from being the same person? Why can't programmers come up with business ideas and do all the marketing/PR/etc. that this rant describes as "the easy part"? This guy seems to fall into the false dichotomy of "programmer in startup" vs. "programmer in big company", when there are plenty of other options available.

Now of course the obvious answer is this: there is not enough time for P and BG to be the same person. Doing all the coding is time-consuming enough; do you also want to do all the marketing and product pitches? On top of that, BG often has this thing called "charisma" (known to geeks as CHA), which geeks often neglect or marginalize but which is quite important when it comes to making people want to give you money for stuff (whether it's VC funding or selling your product).

Not only that, but there is all this other business stuff that needs to be done, like:

Market research: does anybody actually want your product? How often do we geeks embark on an awesome project only to find out that nobody actually wants it? /me raises both hands and would raise more if I had more hands

Sales: how do you make more demand for what you're selling? People might not care at the moment, but could be convinced to care. How do you do that?

Financing: how are you going to pay the bills before the company turns a profit? Unemployment benefits? Not likely. Often you might have to get a loan or sell some equity to a VC/angel so that you can eat while you get the ball rolling.

Etc.: there are a lot of other things that come into play here, but I won't bore you with all those details since I think the three above are enough to make the point.

In short, my point here is that business is not as easy as this guy makes it sound.

What do you do when a business guy comes to you with a pitch? You might as well hear his idea out; it might actually be worth something - although if you're young like me, your sense of what is worth something might not be fully developed yet, so keep that in mind as well. Sign the NDA if you need to, but don't make any commitment until you know what you're getting into. However, the most important thing is to set a precedent: be assertive at the beginning. Make it clear that if you're getting 50% of the equity, then you're actually getting 50% of the company, which includes 50% of the decision making, 50% of the exposure, etc.

Keep in mind though that this requires 50% of the responsibility - if the business fails, it's 50% your fault. If the business guy is doing something obviously stupid, it is your responsibility to let him know and work with him to make a better decision (or realize that maybe what he is doing isn't so stupid). You can't expect to be treated like a partner if you don't act like a partner.

Anyway, the two points I have are:

1) When you're in that situation where you're the developer of a 2-guy startup, assert your position as a partner or you won't be treated like one.

2) Remember that there are more options than "work as programmer for startup" or "work for big co" - namely, "start your own startup" is a viable option. I think a lot of people forget about this when thinking about finding jobs.

Aug 23, 2010

I Have Sold My Soul

I feel I must publicly admit this to you all: I have purchased some Apple products. I'm interested in making apps for the iPad, so I went out and bought one. However, it turned out to be fairly useless for development on its own; you need an actual Mac. So I picked up a Mac Mini off eBay for dirt cheap and have started working with that.

So what are my thoughts on it? Well, my first thought is that it is a pain in the ass. It isn't really the interface (learning a new OS is always tricky, so I'm not factoring that into my considerations). What I'm talking about is Apple itself. The system is not that old (it is running Tiger), but it is a bit annoying to get things from Apple for it. They seem adamant about getting you to purchase Snow Leopard at every turn (which I ended up having to do anyway, since Xcode with the correct iOS SDK does not work with Tiger). At least with Windows, things still work on XP (I can't believe I'm sticking up for Microsoft) without Windows 7 being stuffed down your throat. To even things out, however, Mac software upgrades are far cheaper than Windows ones, so paying for it doesn't hurt that much if I consider it a potential business investment.

Anyway, it might be that I am just not used to the system yet, but I still haven't seen what all the Mac fanboys are raving about. The only thing that I do know at this point is that Apple has bothered me enough that I have absolutely zero desire to purchase any more of their products - although I may end up doing it anyway, depending on how well this whole iPad app thing goes.

What about my Touch Book? Well, I wasn't especially enamoured with it. It was a bit too sluggish for the things I wanted to do and since I bought the iPad I really had no more use for it. I hope the new owner is happy and may it serve him well.

Anyway you can expect a few Objective-C related posts coming up here in the near future.

Aug 3, 2010

Introducing L-Systems

My apologies, I haven't been writing as much these days; I've been having a bit of blogger's block as to what to write about. I've got a few other statistics articles in mind, but since I enjoy variety I don't want to write about the same thing all the time. So I'll reach out to you, my readers: is there any topic in particular you'd like me to write about?

Now that that is taken care of, we can get down to business. I've been doing a lot of reading these days, and one thing that I've stumbled upon is a nifty little computer science topic called L-systems. These things are quite simple to describe, yet they can produce all sorts of interesting results (have you noticed a common theme to some of my blog posts these days?).

You need 3 things to describe an L-system. The first is an alphabet. An alphabet is very simple: it is just the set of characters that you will use within your L-system - nothing more, nothing less. This is similar to a programming language, where your alphabet is some subset of the ASCII character set, unless you're using something more esoteric like APL (which is actually pretty neat; I've been looking at it a bit recently and I might put up a post about it soon - I guess I don't have blogger's block after all! You're still welcome to send requests though :) ).

The next thing you need for an L-system is an axiom. An axiom is simply a finite, non-empty string from your alphabet. You can also call it a seed if you like. The axiom (or seed) serves as the basis for the growth of the system.

The third thing is the most important - the production rules. These map each character in the alphabet to some string. You apply the production rules to the axiom by replacing every character in the axiom, all at once, with the string the rules map it to.

Let's consider an example L-system:

Alphabet: F, +, -
Axiom: F++F++F
Rules:
F -> F-F++F-F
+ -> +
- -> -

It is not necessary to specify the rules for + and -, since any character without a rule is assumed to map to itself (the identity function).

Let's run this L-system through one iteration. Begin with the axiom:

F++F++F

Then apply the rules:

F-F++F-F++F-F++F-F++F-F++F-F

I'd go for another iteration or two, but as you can see this thing grows quickly and the text would probably run outside of this div. I'll just let you use your imagination as to what the next iteration would look like.
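The rewriting step is easy to automate. Here is a minimal sketch in Python; the function name rewrite and the dict-of-rules representation are my own choices for illustration, not taken from the parser linked at the end of this post. Every character is rewritten in parallel, and characters without a rule map to themselves.

```python
def rewrite(axiom, rules, iterations):
    """Apply L-system production rules `iterations` times.

    Every character is rewritten in parallel; characters with no rule
    map to themselves (the identity rule).
    """
    current = axiom
    for _ in range(iterations):
        current = "".join(rules.get(ch, ch) for ch in current)
    return current


koch_rules = {"F": "F-F++F-F"}  # + and - are left out: they map to themselves
print(rewrite("F++F++F", koch_rules, 1))  # F-F++F-F++F-F++F-F++F-F++F-F
```

Each iteration turns every F into four, so the number of Fs goes 3, 12, 48, 192, and so on, which is why the string gets long so quickly.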

You may wonder why I picked this specific alphabet, as it looks rather peculiar. It turns out that this alphabet is a set of commands for a turtle: F means move forward while drawing a line, + means turn left, and - means turn right.

If we set the turtle's turning angle to 60 degrees and iterate 4 times, we end up with a picture like this:

If you iterated this L-system an infinite number of times and at each iteration divided the distance that the turtle moves on each F by 3, you would end up with a fractal called the Koch snowflake (I made the mistake of saying that name out loud to my girlfriend, which caused her to giggle uncontrollably - perhaps I pronounced it wrong).
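Here is a minimal sketch of the turtle interpretation in Python. It returns line segments instead of drawing them, so it doesn't depend on any graphics library. The names turtle_segments and koch_snowflake, and starting at the origin facing along the x axis, are assumptions for illustration.

```python
import math


def turtle_segments(commands, angle_deg, step=1.0):
    """Interpret F, + and - as turtle commands and return the segments drawn.

    F moves forward by `step` while drawing, + turns left by `angle_deg`,
    - turns right by `angle_deg`; any other character is ignored.
    """
    x, y, heading = 0.0, 0.0, 0.0  # start at the origin, facing along +x
    segments = []
    for ch in commands:
        if ch == "F":
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif ch == "+":
            heading += angle_deg
        elif ch == "-":
            heading -= angle_deg
    return segments


def koch_snowflake(iterations):
    """Segments of the Koch snowflake L-system after `iterations` rewrites."""
    commands = "F++F++F"
    for _ in range(iterations):
        # str.replace scans the old string once, so this rewrites every F in
        # parallel; + and - are left unchanged (identity rule).
        commands = commands.replace("F", "F-F++F-F")
    # Divide the step by 3 per iteration so the overall size stays fixed.
    return turtle_segments(commands, 60, step=3.0 ** -iterations)


segments = koch_snowflake(4)
print(len(segments), segments[-1][1])
```

The last segment ends back at the origin (up to floating-point error): each F -> F-F++F-F replacement has the same net displacement as the F it replaces, so the snowflake stays a closed curve at every iteration.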

The next thing we can do is give our turtle an upgrade. In its current state, the turtle is limited to drawing convoluted lines. While this is good, there are a great many interesting things to draw that aren't lines. We can change this by adding a powerful new feature to the turtle with two symbols: [ and ]. The [ pushes the current state of the turtle onto a stack (there is only one stack, so I should call it the stack), and ] pops that state off the stack and restores the turtle to that state.
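Adding [ and ] to a turtle interpreter only takes a list used as a stack. This Python sketch (bracketed_turtle is a hypothetical name, and starting at the origin facing straight up is an assumption so that plants grow upward) pushes (x, y, heading) on [ and pops it on ]. The usage at the bottom runs the bracketed plant rule F -> FF-[-F+F+F]+[+F-F-F] with a 22.5 degree angle and a step of 6.

```python
import math


def bracketed_turtle(commands, angle_deg, step=1.0):
    """Turtle interpreter with a state stack, returning the segments drawn.

    F draws forward, + turns left, - turns right,
    [ pushes the current (x, y, heading) and ] pops and restores it.
    """
    x, y, heading = 0.0, 0.0, 90.0  # start at the origin facing up, so plants grow upward
    stack = []
    segments = []
    for ch in commands:
        if ch == "F":
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif ch == "+":
            heading += angle_deg
        elif ch == "-":
            heading -= angle_deg
        elif ch == "[":
            stack.append((x, y, heading))
        elif ch == "]":
            x, y, heading = stack.pop()
    return segments


# The branching plant rule F -> FF-[-F+F+F]+[+F-F-F], iterated 4 times.
commands = "F"
for _ in range(4):
    commands = commands.replace("F", "FF-[-F+F+F]+[+F-F-F]")
plant = bracketed_turtle(commands, 22.5, step=6)
print(len(plant))  # 4096: every F becomes 8 Fs, and 8 ** 4 == 4096
```

Because ] jumps the turtle back to where the matching [ was, each bracketed section draws a side branch and then the main stem carries on from the branch point.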

This gives us a lot more power to create interesting images. Consider this L-system:

Alphabet: F, [, ], +, -
Axiom: F
Rules:
F -> FF-[-F+F+F]+[+F-F-F]

If you iterate this system 4 times and use an angle of 22.5 degrees and a step size of 6 pixels for your turtle, you get something like this (grabbed from The Algorithmic Beauty of Plants (The Virtual Laboratory)):

That's a bit cooler! And that image is generated by a completely deterministic process (no randomness), which means that, unlike my fractal trees, you will get exactly the same picture if you follow the rules above.

You can take these L-system things much further, but I can see your eyes beginning to glaze over, so I will save that discussion for another day. As for code, you can see the L-system parser here, and the turtle graphics renderer here.

Jul 22, 2010

Biasedness and Consistency

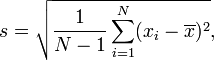

I remember back when I was taking my first statistics class and this formula appeared (hotlinked from Wikipedia):

I thought to myself, "self, holy crap! That's nasty!" (there are actually a lot of nastier formulae, but I was young in my academic career and hadn't seen too many of them yet). At the time all I did was memorize the formula and promptly forget it after I had finished the course.

After I started taking economics and I had to take a lot more statistics, I started to wonder why this equation is the way it is. It turns out to be a bit interesting, and you can learn a bit about statistics when you answer this question.

The part I found weirdest is that when you compute the standard deviation of a population you divide by n, but when you compute it from a sample you divide by n - 1. Why do they do this?

Let's go on an aside for a bit. When we are using a sample, the statistics we generate (the sample mean and the sample variance) are estimators of the actual parameters, based on the sample that we have taken. These two estimators are random variables, which means it is highly unlikely that they will exactly equal the actual parameters. This is not a bad thing, but we would like to have some measures of the "goodness" of our estimators.

One measure of "goodness" is called unbiasedness. Intuitively we all know what a bias is: something that sort of skews the estimate away from the actual value that we are estimating. Formally, you would call the estimator unbiased if:

$E(\text{estimator}) = \text{parameter}$

The E function there is the expected value of the random variable, which is essentially the average value the random variable comes out to. If on average the estimator does not come out to our parameter, then we have some kind of bias going on.

Given the above definition, our variance estimator would be unbiased if:

$$E(s^2) = \sigma^2$$

It turns out that if you divide by n for the sample variance you end up with:

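$$E(s^2) = \sigma^2 \cdot \frac{n-1}{n}$$

Since (n - 1) / n is less than 1, this is always smaller than the true variance, so our estimate is biased downwards. Multiplying by n / (n - 1) cancels that factor, and doing so is the same as dividing the sum of squared deviations by n - 1 instead of n. That is the bias correction.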
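Here's a sketch of where the (n - 1) / n factor comes from, assuming the n observations are independent draws with mean μ and variance σ²:

$$\sum_{i=1}^{n}(x_i-\bar{x})^2 = \sum_{i=1}^{n}(x_i-\mu)^2 - n(\bar{x}-\mu)^2$$

$$E\left[\sum_{i=1}^{n}(x_i-\bar{x})^2\right] = n\sigma^2 - n\,\mathrm{Var}(\bar{x}) = n\sigma^2 - n\cdot\frac{\sigma^2}{n} = (n-1)\sigma^2$$

The first line comes from expanding (x_i - μ) - (x̄ - μ) and using Σ(x_i - μ) = n(x̄ - μ). The second uses E[(x_i - μ)²] = σ² and Var(x̄) = σ²/n for independent draws. Dividing the result by n gives σ²(n - 1)/n, while dividing by n - 1 gives exactly σ².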

So that's why you divide by n - 1 and not n. While that's almost enough statistics for one day, there is one last tidbit worth pointing out. If you look at the formula for the expected value of the biased estimator, you'll notice that the bias shrinks as n gets large. The factor (n - 1) / n goes to 1, so in the limit the bias goes to zero. An estimator whose bias vanishes like this is called asymptotically unbiased, and this one is also consistent, meaning it converges to the true variance as the sample grows. Unbiasedness and consistency are separate properties (an unbiased estimator is not automatically consistent). Even so, you might sometimes prefer a biased yet consistent estimator over an unbiased one, for example if there is something wrong with the variance of the unbiased estimator. Eventually, in one of my statistics posts, I will talk about some problems with real-world data that might cause this sort of thing to happen.
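If you'd rather see numbers than trust the algebra, here's a small C sketch. The function names and the example population are hypothetical, chosen only for illustration. Instead of a random simulation, it averages over every possible sample of size n drawn with replacement from a tiny population, so the expected values come out exactly (up to floating-point rounding). Dividing by n - 1 should land on σ², and dividing by n should land on σ²(n - 1)/n.

```c
/* Exact average of sum((x_i - xbar)^2) / divisor over all k^n equally likely
   samples of size n drawn with replacement from pop[0..k-1].
   Assumes n <= 16 and that k^n is small enough to loop over. */
double expected_estimate(const double *pop, int k, int n, double divisor) {
    long long total = 1;
    for (int i = 0; i < n; i++) {
        total *= k;
    }
    double acc = 0.0;
    for (long long s = 0; s < total; s++) {
        double x[16];
        double mean = 0.0;
        long long r = s;
        /* Decode s as n base-k digits: digit i picks the i-th draw. */
        for (int i = 0; i < n; i++) {
            x[i] = pop[r % k];
            r /= k;
            mean += x[i];
        }
        mean /= n;
        double ss = 0.0; /* sum of squared deviations from the sample mean */
        for (int i = 0; i < n; i++) {
            ss += (x[i] - mean) * (x[i] - mean);
        }
        acc += ss / divisor;
    }
    return acc / (double)total;
}

/* Population variance sigma^2: divide by k, since pop[] is the whole population. */
double population_variance(const double *pop, int k) {
    double mean = 0.0;
    for (int i = 0; i < k; i++) {
        mean += pop[i];
    }
    mean /= k;
    double ss = 0.0;
    for (int i = 0; i < k; i++) {
        ss += (pop[i] - mean) * (pop[i] - mean);
    }
    return ss / k;
}
```

For example, with the population {1, 2, 6} you get σ² = 14/3. Calling expected_estimate(pop, 3, 2, 2.0) gives half of that (7/3), and calling it with divisor n - 1 gives 14/3 for every n. As you raise n, the divide-by-n value creeps toward σ², which is the consistency point above.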

Jul 19, 2010

Science Vs. Faith

Jul 15, 2010

The Barnsley Fern

The guy who coined the name "fractal", Benoît Mandelbrot, really liked fractals because he believed - or I should say believes since he's still alive (UPDATE: I am extremely disappointed to say that as of Oct. 14, 2010 this is no longer true) - that nature is fractal in nature (no pun intended). He said that many things in the world do not fit into our standard notion of geometry with lines, spheres, cubes, etc. and instead take on a bit more sophisticated form - fractals.

One thing is for sure: many of the fractals that have been discovered are incredibly similar to real life. An example is Barnsley's fern, a fractal that looks an awful lot like the ferns we see in nature, generated by repeatedly applying a small set of functions (an iterated function system). Here's a picture for you:

This image is generated using the following algorithm:

x, y = 0.0, 0.0
loop until satisfied:
    draw x, y
    x, y = random_func(x, y)

What random_func() does is apply a random affine transformation to the pair (x, y), chosen from a group of 4 possible transformations, each with a specific probability. You can see the transformations on the Wikipedia page, or in my version of the code here. If you don't feel like going that far, an affine transformation is just a linear transformation followed by a shift: you take the vector (x, y) - let's call it v - multiply it by a matrix and then add a constant vector:

$$v \leftarrow A v + a$$

In the fern drawing you choose a random transformation, which is just a pair of a matrix and a vector to plug into the above formula. Different transformations will give you differently shaped ferns, so feel free to play around and see what you get.
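Here's a minimal C sketch of that loop, assuming the standard coefficients listed on the Wikipedia page. Each map is written as x' = a x + b y + e, y' = c x + d y + f, which is the A * v + a form above with A = [[a, b], [c, d]] and the constant vector (e, f). The helper barnsley_fern() is hypothetical: it just collects the points into arrays instead of drawing them.

```c
#include <stdlib.h>

/* One affine map (x, y) -> (a*x + b*y + e, c*x + d*y + f), picked with probability p. */
typedef struct {
    double a, b, c, d, e, f, p;
} fern_map;

/* Standard Barnsley fern coefficients (as listed on Wikipedia). */
static const fern_map FERN_MAPS[4] = {
    {  0.00,  0.00,  0.00, 0.16, 0.0, 0.00, 0.01 }, /* stem */
    {  0.85,  0.04, -0.04, 0.85, 0.0, 1.60, 0.85 }, /* successively smaller leaflets */
    {  0.20, -0.26,  0.23, 0.22, 0.0, 1.60, 0.07 }, /* largest left-hand leaflet */
    { -0.15,  0.28,  0.26, 0.24, 0.0, 0.44, 0.07 }, /* largest right-hand leaflet */
};

/* random_func(): pick one map according to its probability and apply it. */
static void random_func(double *x, double *y) {
    double r = (double)rand() / ((double)RAND_MAX + 1.0); /* uniform in [0, 1) */
    const fern_map *m = &FERN_MAPS[3]; /* fallback in case of rounding in the sums */
    double cumulative = 0.0;
    for (int i = 0; i < 4; i++) {
        cumulative += FERN_MAPS[i].p;
        if (r < cumulative) {
            m = &FERN_MAPS[i];
            break;
        }
    }
    double nx = m->a * *x + m->b * *y + m->e;
    double ny = m->c * *x + m->d * *y + m->f;
    *x = nx;
    *y = ny;
}

/* Run the loop for n points, storing each (x, y) where the pseudocode would draw it. */
static void barnsley_fern(double *xs, double *ys, int n, unsigned int seed) {
    double x = 0.0, y = 0.0;
    srand(seed);
    for (int i = 0; i < n; i++) {
        xs[i] = x;
        ys[i] = y;
        random_func(&x, &y);
    }
}
```

To actually see the fern, print each (xs[i], ys[i]) pair and feed the output to whatever plotting tool you like. Tweaking the numbers in FERN_MAPS is the "play around" part.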

It's truly amazing how something so familiar can be generated using such a simple formula. I'm hoping to dig up more things like this and post them here for all of you to see.

Jul 11, 2010

Installing Swarm in Ubuntu

I've been hoping to use some software called Swarm, a modelling framework for multi-agent simulations. Unfortunately the code readily available on their site doesn't work on my 64-bit Ubuntu machine, and their Ubuntu instructions are a bit outdated! However, Paul Johnson of the University of Kansas has made some .deb packages available. To install, just run these commands:

sudo apt-get install libhdf4-dev libhdf5-serial-dev gobjc libobjc2
mkdir swarm-install
cd swarm-install
wget http://pj.freefaculty.org/Ubuntu/10.04/amd64/blt/blt_2.4z-5_amd64.deb
wget http://pj.freefaculty.org/Ubuntu/10.04/amd64/blt/blt-dev_2.4z-5_amd64.deb
wget http://pj.freefaculty.org/Ubuntu/10.04/amd64/swarm/libswarm0_2.4.0-1_amd64.deb
wget http://pj.freefaculty.org/Ubuntu/10.04/amd64/swarm/libswarm-dev_2.4.0-1_amd64.deb
sudo dpkg -i blt_2.4z-5_amd64.deb
sudo dpkg -i blt-dev_2.4z-5_amd64.deb
sudo dpkg -i libswarm0_2.4.0-1_amd64.deb
sudo dpkg -i libswarm-dev_2.4.0-1_amd64.deb

This will install Swarm. You can grab some sample apps here. One issue, though: when compiling, the apps expect a basic Makefile to be installed under /usr/etc/swarm, which doesn't exist (the packages put it in /etc/swarm). To fix this you need to tweak the Makefile. Open the Makefile for the project you want to build and change the line that looks like this:

include $(SWARMHOME)/etc/swarm/Makefile.appl

to this:

include /etc/swarm/Makefile.appl

After that the apps should compile just fine, provided your system can compile Objective-C apps (gobjc is in the apt-get list above, so that should already be covered).

Jun 24, 2010

If Cantor Were A Programmer...

When you take math in university, one thing you'll probably come across is the concept of countability. A set is countable if you can find some way of assigning a different natural number to each element of that set - in other words, you can count the elements in it.

This guy Cantor discovered in the late 19th century that there are different types of infinities. He did this by analyzing various sets of numbers and discovered that certain infinite sets are in fact "bigger" than other infinite sets. Which is kinda weird, but it works.

I thought it might be fun (my definition of fun is apparently kinda weird) to see what would happen if instead of a mathematician Cantor were a programmer, and what his proof might look like.

Let's take a hypothetical language called C∞, which is kinda like C except that it runs on our magical computer that has an infinite amount of RAM, and thus the integer types in C∞ have infinite precision.

In C∞, we say a type T is countable if we can construct a function like this:

T foo(unsigned int n)

Where foo() satisfies:

1) For every possible unsigned int value n, foo() will return a T - this means no exceptions, no crashes, etc.

2) For every possible T value t, there is exactly one unsigned int n1 such that:

foo(n1) == t

We call functions like this one-to-one (strictly speaking, a one-to-one correspondence: every T value is produced by exactly one n).

What are some countable types? Well, signed int is countable. Here's a function that will work:

int foo(unsigned int n){
    if (n % 2 == 0){
        return n / 2;
    }else{
        // divide first, then negate as a signed value; negating an unsigned value would wrap around
        return -(int)((n + 1) / 2);
    }
}

If you start from 0 and count up, this function will produce 0, -1, 1, -2, 2, -3, ... This turns out to be a one-to-one function, so the signed ints are countable.
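Real C ints aren't infinite, but you can still check the same mapping on a finite range. The sketch below is a hypothetical finite-width version. It returns long long so that every result for a 32-bit unsigned int fits. It also adds foo_inverse(), a helper made up for this example, which returns the unique n for each value. foo_inverse(foo(n)) == n shows that no value is produced twice, and foo(foo_inverse(t)) == t shows that no value is skipped.

```c
/* Finite-width version of foo(): 0, 1, 2, 3, 4, ... -> 0, -1, 1, -2, 2, ...
   Returns long long so every result for a 32-bit unsigned int fits. */
long long foo(unsigned int n) {
    if (n % 2 == 0) {
        return (long long)(n / 2);
    } else {
        /* Widen before adding 1 and negating, so nothing wraps around. */
        return -((long long)n + 1) / 2;
    }
}

/* foo_inverse(): the unique n with foo(n) == t, for any t that foo() can produce. */
unsigned long long foo_inverse(long long t) {
    if (t >= 0) {
        return 2ULL * (unsigned long long)t;       /* non-negatives sit at even n */
    }
    return 2ULL * (unsigned long long)(-t) - 1ULL; /* negatives sit at odd n */
}
```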

Signed int pairs turn out to be countable too. Suppose we have a type that looks like this:

struct pair {
    int a, b;
};

This type is also countable. The proof is fairly simple when you use a picture; see here for a nice one that's already done. It uses rational numbers, which can be written as pairs of integers.

What about the real numbers though? This one is a bit trickier. Let's represent a real number like this:

struct real {
    int integerPart;
    bit decimal[∞];
};

What we're doing here for the decimal part is representing it as an infinite series of 1s and 0s. Since we're all computer geeks here, we know that a binary string is just as capable of representing a number as a decimal string, so we can still represent any real number using the above type. (A few numbers get two representations, like 0.0111... and 0.1000... in binary, just as 0.0999... = 0.1 in decimal. A careful proof has to deal with this, but it doesn't change the result.)

Now we show that the reals are not countable using a contradiction. First, we assume that the reals are in fact countable. Let's simplify it a little and only work with the real numbers between 0 and 1, so we'll have a real0 type that is the same as real but with the integerPart field set to 0.

So since real0 is countable (by assumption), it means that we can create a one-to-one function like this:

real0 foo_real(unsigned int n)

Since this function is one-to-one, for every possible real0 value x there is an unsigned int n that will produce x when you call foo_real(n). So let's create an array that has all those real numbers in it:

real0 all_the_reals[∞];
for (unsigned int i = 0; i < ∞; i++){
    all_the_reals[i] = foo_real(i);
}

Now let's cause a problem. Consider the following code:

real0 y;
for (unsigned int i = 0; i < ∞; i++){
    // flip the i-th bit of the i-th entry (the "diagonal")
    y.decimal[i] = !all_the_reals[i].decimal[i];
}

Why is this a problem? Well, we assumed that all possible values for real0 are in all_the_reals. But for any i, we know that y != all_the_reals[i] because the bit at position i is inverted. Therefore y is not in all_the_reals, which means that all_the_reals does not contain all possible real0 values. So there is a real0 value that foo_real() never produces, which contradicts our assumption that foo_real() is one-to-one. So real0 is not countable, and since real0 is a sub-type of real, real is also not countable.

What does this tell us? Well, we know that with our infinite precision computer there are an infinite number of possible unsigned ints we could have. At the same time we have shown that there is no one-to-one mapping between the reals and the unsigned ints, so the infinity of the reals is effectively "bigger" than the infinity of the unsigned ints.
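The diagonal trick also works on anything finite you can print out, which makes it easy to play with. In this small C sketch, a hypothetical N_BITS x N_BITS grid of bits stands in for the first N_BITS entries of all_the_reals, with one row per entry truncated to N_BITS bits. Whatever bits you fill the grid with, flipping the diagonal produces a row that isn't in the grid.

```c
#include <stdbool.h>

#define N_BITS 8 /* hypothetical finite stand-in for the infinite list and its infinite bits */

/* y[i] = !rows[i][i]: flip the i-th bit of the i-th entry, like the loop that builds y. */
void diagonal(bool rows[N_BITS][N_BITS], bool y[N_BITS]) {
    for (int i = 0; i < N_BITS; i++) {
        y[i] = !rows[i][i];
    }
}

/* Returns true if y is bit-for-bit equal to some row of the grid. */
bool appears_in(bool rows[N_BITS][N_BITS], const bool y[N_BITS]) {
    for (int i = 0; i < N_BITS; i++) {
        bool same = true;
        for (int j = 0; j < N_BITS; j++) {
            if (rows[i][j] != y[j]) {
                same = false;
                break;
            }
        }
        if (same) {
            return true;
        }
    }
    return false;
}
```

With finite N_BITS there are only N_BITS rows but 2^N_BITS possible bit strings, so a missing string is no surprise here. The point of the C∞ version is that the same construction still works when both the list and the bits are infinite.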

Why would a programmer care? Well, this has an implication for computer science. But first, let's ask another question - if there are real0 numbers missing from all_the_reals, how many are there? Is there just one? Or even a finite number? Well, no, and here's why. Suppose there are a finite number of missing real0s. We could then create an array of them:

real0 missing_reals[N];

However, we could then create a one-to-one function that covers every real0 after all:

real0 foo_real_with_missing(unsigned int n){
    if (n < N){
        return missing_reals[n];   // the first N slots list the missing values
    }else{
        return foo_real(n - N);    // everything after that is foo_real, shifted by N
    }
}

That can't work, because the diagonal trick above breaks any such list of real0s, including this one. So there must be an infinite number of missing real0s. Are the missing ones countable? Assume that they are, and create another one-to-one function foo_real2() that produces all of these missing real0s. But then we could create a one-to-one function that grabs all the reals returned by foo_real() and foo_real2():

real0 foo_real_combined(unsigned int n){
    if (n % 2 == 0){
        return foo_real(n / 2);          // even n read from foo_real()
    }else{
        return foo_real2((n - 1) / 2);   // odd n read from foo_real2()
    }
}

That would again list every real0, which we know is impossible. So even the real0s that are missing from foo_real() are uncountable. Crap! In fact, by the same reasoning, the reals missing from the combination of foo_real() and foo_real2() are uncountable too. If we create another function foo_real3() that grabs the real0s outside of foo_real_combined(), what's left is still uncountable. No matter how many functions we create, they will still not capture all the real0s.
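Both foo_real_with_missing() and foo_real_combined() come down to index bookkeeping, and you can sanity-check that with ordinary finite numbers. In this sketch the source struct and the with_missing()/combined() functions are hypothetical. Each function only reports which list a given n reads from and at what position. The check confirms that, over a finite range of n, every position in each list is hit exactly once, which is the one-to-one property the argument relies on.

```c
/* Where slot n of a combined enumeration reads from:
   which == 0 -> missing_reals[index], 1 -> foo_real(index), 2 -> foo_real2(index). */
typedef struct {
    int which;
    unsigned int index;
} source;

/* Mirrors foo_real_with_missing(): the first n_missing slots come from the finite array. */
source with_missing(unsigned int n, unsigned int n_missing) {
    source s;
    if (n < n_missing) {
        s.which = 0;
        s.index = n;
    } else {
        s.which = 1;
        s.index = n - n_missing;
    }
    return s;
}

/* Mirrors foo_real_combined(): even n go to foo_real(), odd n go to foo_real2(). */
source combined(unsigned int n) {
    source s;
    if (n % 2 == 0) {
        s.which = 1;
        s.index = n / 2;
    } else {
        s.which = 2;
        s.index = (n - 1) / 2;
    }
    return s;
}
```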